At Development, we are always trying to improve our processes and service – for authors and readers. In April 2015, we made some changes to our peer review process, aimed at encouraging a more constructive approach to peer review. As we wrote in the Editorial announcing these changes, ‘there can be a tendency for a review to read like a “shopping list” of potential experiments … Instead, we believe that referees should focus on two key questions: how important is the work for the community, and how well do the data support the conclusions? … In other words, what are the necessary revisions, not the “nice-to-have”s?’ (Pourquié and Brown, 2015). Our new system has been in place for over a year now, and we have seen a change in the tone and nature of referee reports: in general, referees have embraced our new guidelines and we feel that this has had a positive effect – both in helping editors to take a decision, and in providing more streamlined feedback for authors. However, and as we recognised at the time, ‘…these changes are conservative compared with some of the more radical approaches in peer review that have been implemented and trialled elsewhere’ (Pourquié and Brown, 2015). At the beginning of 2016, while reviewing various aspects of the peer review processes and online functionality of the Company's journals, we therefore conducted a community survey to gauge your opinions on how we could improve further. Over 300 of you completed the survey (over 800 across all our journals), and we are very grateful for your detailed responses.

In the first part of the survey, we asked you to rank possible improvements or changes to the way we do peer review in order of importance (see Box 1). Perhaps unsurprisingly, you ranked the two statements that relate to speed of publication as the top two priorities. While our own research and author surveys suggest that our speeds are reasonably competitive compared with other journals, there are always improvements to be made and we are actively looking at ways in which we can accelerate the process. We also recognise that multiple rounds of review and revision can be frustrating for the author, so we try to avoid these wherever possible – while still ensuring the highest standards of peer review. Moreover, such efforts are rarely made in vain: we make a strong commitment to papers at first decision, accepting over 95% of manuscripts for which we invite a revision.

Our January survey asked:

‘Unbiased, independent peer review is at the heart of our publishing decisions but there is always room for improvement and we are open to experimentation and change based on the needs of our community. Please rank the following in order of importance to you.’

Your responses ranked the statements in the order below – from most to least important.

1. Improve speed from submission to first decision
2. Reduce number of rounds of review and revision
3. Collaborative peer review trial (inter-referee discussion before a decision is made)
4. Name of the Editor who handled the paper published with the article
5. Double-blind peer review trial (author identity withheld from referees)
6. Network for transferring referee reports between journals in related fields
7. Post the peer review reports alongside the published articles
8. ‘Open’ peer review trial (referee identity known to authors but not made public)
9. Post-publication commenting

Speed aside, you ranked ‘collaborative peer review trial’ as the most important potential innovation – in fact, you rated this almost as important as reducing the number of rounds of review. This chimed with regular feedback we receive from the community, who, as authors, have found such models of peer review helpful. The idea is that, once all the reports on a paper have been returned, the editor shares these among the referees, asking for further feedback that might clarify the decision. This can help to resolve differences between referees, identify unnecessary or unreasonable requests, or – conversely – highlight valid concerns raised by one referee but overlooked by the others. Many journals, Development included, have been doing this informally with a subset of difficult cases for many years and it can prove invaluable in helping editors to make the right decision on a paper.

Given your enthusiasm for this approach, we have carefully assessed a number of possible models, including those already in place at other journals. These range from ‘cross-referee commenting’ as implemented by The EMBO Journal (Pulverer, 2010) and others, to the more active discussions embraced by eLife (Schekman et al., 2013). Bearing in mind the need to balance the value of additional feedback against its effect on speed to decision, as well as the fact that some papers will benefit from further input more than others, we believe that a cross-referee commenting model is most appropriate for Development.

For all research papers submitted after mid-September, the full set of referee reports (minus any confidential comments) will be shared among all the referees. These may be accompanied by specific requests for feedback from the Editor. Referees will then be given two working days to respond before the Editor takes a decision – thus minimising any impact on speed (although the Editor may choose to wait for input in cases where the decision is particularly difficult or borderline). We anticipate that in many cases, feedback will either not be received or will not alter the Editor's decision. For a minority of papers, however, we believe that this process will significantly aid decision-making and help the authors to move forward with the paper – whether the decision is positive or negative. And, because it is not always possible to predict what will come out of such a process, we think it important to implement this as standard across all papers. We know that our referees already do a great job in helping authors to improve the papers we review, and we are hugely grateful for their efforts. We hope they (you!) will engage with this new development, and that authors will find it helpful. We will also continue to review other possible improvements to how we handle papers – bearing in mind your feedback from the survey.

The second part of the survey focused on how we present the work we publish (see Box 2). Once again, it seems that what matters most to you is not how the paper looks when it comes out, but how quickly it appears. Since mid-2015, we have been posting the author-accepted versions of manuscripts on our Advance Articles page before issue publication, minimising the time between acceptance and appearance of the work online. While there is still some delay (1–2 weeks) before online posting, this is primarily to allow us time to run our standard ethics checks on all papers – helping to ensure the integrity of the work we publish. Articles then typically appear in an issue around 6 weeks after acceptance: this reflects the time required to copy-edit, typeset and prepare the issue, although again we are working on ways of accelerating these processes.

Our January survey asked:

‘We recently rolled out a new-look website to make it easier for you to find and read content, but new features and functionality are being developed all the time. Which areas do you think should be our focus for 2016 and beyond? Please rank the following in order of importance to you.’

Your responses ranked the statements in the order below – from most to least important.

1. Improve speed from acceptance to online publication
2. Easier viewing of figures alongside the relevant text
3. Easier viewing of supplementary material including movies
4. Graphical abstracts (diagrammatical summaries of papers)
5. Publish final versions of articles one by one, gradually building an issue, rather than waiting for an issue to be complete before publication
6. Easier access to related articles, special issues and subject collections
7. Better text and data mining services
8. Annotation of article PDFs (e.g. ReadCube)
9. More community web content such as feeds from third-party bloggers

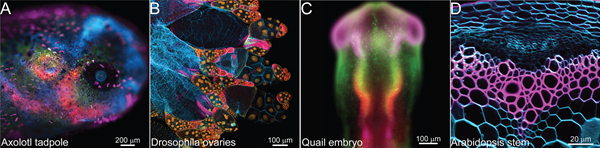

In terms of potential innovations to our online display, you ranked easier viewing of figures and better display of Supplementary Information, including movies, as the most important. This feedback fitted precisely with the top priorities on our own development wish-list. Improving online functionality is a long-haul project, particularly given the need to work with external partners, to ensure that any new tools integrate seamlessly with our platform and to be confident that they will continue to serve their purpose long-term. However, we are making progress, particularly with Supplementary Information. We are now able to make the Supplementary Information available with the Advance Article version of the manuscript, so readers no longer have to wait until issue publication to view it. We can also announce that we have partnered with Glencoe Software (glencoesoftware.com) for better display of movies. Once in place, this will mean that movies can be viewed directly from within the HTML version of the article. Given that movies are often an integral part of a developmental biology paper, we are delighted that we will finally be able to give them the prominence they deserve. Other innovations to our online display, aimed at better integration of text, figures, movies and data, are in the pipeline.

As a journal, we are always looking for ways to improve the way we both handle and present your work. Along with other recent innovations – including co-submission to bioRxiv, integrated data deposition with Dryad, adoption of ORCiD for unambiguous author identification and promotion of the CRediT taxonomy for author contributions – we hope that these announcements will help authors to better disseminate and gain recognition for their research, and help readers to better access and utilise it. As always, we welcome your feedback and suggestions for future improvements.