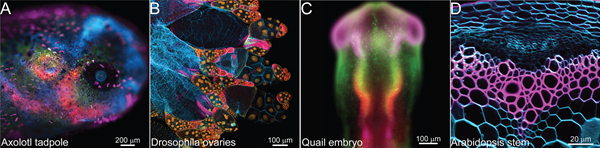

Advances in image analysis are set to revolutionise developmental biology, but to the non-specialist they may seem out of reach. In their new paper in Development, Thomas Naert, Soeren Lienkamp and colleagues set out to demystify the use of deep-learning methods and demonstrate how they can be used in disease modelling. To find out more about their route into deep-learning-based image analysis, we caught up with first author and postdoctoral researcher Thomas Naert, and his supervisor Soeren Lienkamp, Assistant Professor at the Institute of Anatomy of the University of Zurich.

Soeren (L) and Thomas (R) sitting at their mesoSPIM light-sheet microscope

Soeren, can you give us your scientific biography and the questions your lab is trying to answer?

SL: I studied Medicine in Freiburg in Germany and started research on Xenopus to earn the German MD degree. I then had the chance to spend a year in Japan looking at presynaptic proteins in C. elegans. After finishing Medical School, I continued doing basic science on Xenopus kidneys as a postdoc and clinical resident, and then later as a group leader in the Nephrology Department at University Freiburg Medical Center. In 2019, I became Assistant Professor at the Institute of Anatomy of the University of Zurich. My group is interested in how kidneys form and in the mechanisms behind genetic renal diseases. One major question is how a small number of transcription factors can control cell identity. Another focus is ciliopathies, including cystic kidney disease, and the general question of how epithelial tubules maintain their shape and function.

Thomas, how did you come to work in Soeren's lab and what drives your research today?

TN: During my PhD studies, I worked with Kris Vleminckx on generating cancer models using CRISPR in Xenopus tropicalis, and I decided I wanted to continue working with Xenopus during my postdoc. It is an extremely exciting time to work with Xenopus, because CRISPR has really unlocked the frog as a powerful model for human disease studies. I felt that Soeren's lab would be an environment that could foster my scientific curiosity in imaging and disease modelling. When I visited the lab, I became fascinated with the possibility of using the mesoSPIM light-sheet microscope that was available just down the hallway. Seeing some preliminary imaging of Xenopus tadpoles convinced me that I wanted to pursue these 3D imaging technologies. Further, working on polycystic kidney disease modelling still drives my research today. It is motivating to work on due to the large, unmet medical need for new treatments.

Can you give us the key results of the paper in a paragraph?

TN: In a sentence: ‘Deep learning for all’. With this paper we intend to demonstrate, using real-life use cases, the power of deep learning for image analysis in developmental biology and how it is within reach of wet-lab biologists without a coding background using freely available Fiji tools. We deployed these methods on disease models affecting a variety of different organs and imaging modalities. We also provide real-life examples to counter some common preconceptions about deep learning for image analysis. We hope our experiences will help people to access these powerful tools in their own research lines. Further, we also established several exciting new models for polycystic kidney disease in Xenopus, which we feel have specific benefits compared to existing models.

Your background is in disease modelling in the kidney. What prompted you to use deep-learning methods, such as convolutional neural networks (CNNs), in your research, and how did you get started?

SL: It started because we had a great collaboration with Olaf Ronneberger from the Computer Vision Group when I was still in Freiburg. He once mentioned that a new tool he had built (U-Net) was really good at detecting cells and other structures in images. I didn't realise the potential at first, but when we tried to segment embryonic kidneys in our own data, it became clear that the U-Net could recognise structures that didn't stand out by the staining alone, but identified them by their form and position. Olaf's team, and Özgün Çiçek in particular, were really helpful in introducing us to the technology. So, it started with the practical problem of how to automate measurements of kidney tubules, but when we grasped the potential, Thomas really took it to a much broader level and tried out so many more applications. It kept on performing really well.

Did you identify any key pitfalls for a wet-lab scientist in implementing CNNs for image analysis?

TN: I think the main challenge for most people would be actually setting up the deep-learning environment because this needs to run on a Linux computer. However, if you are a bit tech-savvy or can elicit some help from IT support in your institute, you can get it up and running quickly. After that, the graphical user interface of the Fiji plug-in really allows you to access the deep learning without having to write a single line of code. In order to demystify the training process, we also uploaded some instructional videos on our lab website, where you can follow along as I train and deploy a network segmenting embryos from the background.

When doing the research, did you have any particular result or eureka moment that has stuck with you?

TN: One of the first deep-learning networks I trained was the 2D-NephroNet, which segments out kidneys from Xenopus tadpoles across pathological states and developmental stages. Naively, we initially annotated hundreds of images, thinking that we would need a ton of training data for deep learning to work. So, we trained and, indeed, the network performed well. After that, we thought ‘let's reduce the training data to see how much data is enough’. As I reduced the training dataset down from 110, to 70, to 50, to 15, the network kept training and performing well. In the end, the final network was trained on only 15 images and was performing at human-level accuracy. It was really at that time that the potential of these deep-learning techniques hit me. Reducing the amount of training data required also reduces most of the initial time investment into using deep learning. What remains is a substantial gain in time when automating the analysis of your experiments.
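For readers who want to picture the data-reduction experiment Thomas describes, the following is a minimal sketch and not the code used in the paper. It assumes segmentation quality is scored with the Dice overlap (the interview does not say which metric was used), and the helper names `dice_score` and `nested_subsets` are hypothetical. Training itself is left out; the sketch only shows how shrinking, nested training sets could be drawn and how a predicted mask could be compared with a human annotation.

```python
import random


def dice_score(pred, truth):
    """Dice overlap between two binary masks given as flat lists of 0/1."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    overlap = sum(p * t for p, t in zip(pred, truth))
    total = sum(pred) + sum(truth)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * overlap / total


def nested_subsets(image_ids, sizes, seed=0):
    """Map each size to a subset; smaller subsets are contained in larger ones."""
    shuffled = list(image_ids)
    if max(sizes) > len(shuffled):
        raise ValueError("requested more images than are available")
    random.Random(seed).shuffle(shuffled)
    return {n: shuffled[:n] for n in sizes}


# Training-set sizes mentioned in the interview: 110, 70, 50 and 15 images.
subsets = nested_subsets(range(110), sizes=[110, 70, 50, 15])

# Toy example: a 4-pixel prediction compared with a 4-pixel annotation.
example = dice_score([1, 1, 0, 0], [1, 0, 0, 0])
```

In practice, one network would be trained per subset and the score averaged over a held-out set of annotated images, so that a drop in performance at smaller sizes becomes visible.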

And what about the flipside: any moments of frustration or despair?

TN: Sometimes networks don't immediately train as you expect. Usually, this is the consequence of small errors during the preparation of the training data or of entering incorrect hyperparameters. Initially, when we were still training models on large datasets, this could mean that you lost 2 days of computation, which was time and resources you were not getting back. It was sometimes frustrating when I was excited to deploy a network that had been brewing for a couple of days, only to find that the segmentations were not even close. This became less of an issue later in the project as we realised that these long training times were not necessary and could be scaled down dramatically.

In this paper you use advanced imaging techniques and CRISPR/Cas9 gene editing, as well as deep-learning-based image analysis; which do you think will have the biggest impact in the development and disease modelling community?

TN: CRISPR/Cas9 has already heavily impacted the developmental biology community. It is a really powerful tool in Xenopus tropicalis for modelling human diseases and has many other applications. Further, I think new imaging and analysis technologies can only really impact the community if they are developed hand in hand. Acquiring ever larger and more complex imaging datasets does not help the researcher make discoveries if the tools are not in place to make sense of the big-data tangle. On a broader scope, I am a firm believer that we are on the edge of a true AI revolution that is not only going to drastically alter the way developmental biologists design experiments, but will transform many parts of society as a whole.

What next for you after this paper?

TN: I want to continue using deep learning, possibly beyond computer vision, to push forward disease modelling in Xenopus. I think there are many interesting networks and architectures out there to explore! I really like that deep learning allows me to ask questions and design experiments in new and exciting ways.

Where will this story take the Lienkamp lab?

SL: This story has already seeded a number of projects. For example, we are currently taking a much closer look at the mechanisms behind polycystic kidney disease. But just seeing the power of CNNs has liberated my imagination for new project ideas. I'm sure these tools will be central to most of our future research – they are just too useful not to employ for almost any analysis task.

Finally, let's move outside the lab – what do you like to do in your spare time?

SL: Our two young kids are taking up almost all of my spare time. As a family, we enjoy exploring the parts of Switzerland we haven't seen yet or just beautiful Zurich; we just love the lake.

TN: Moving to Zurich also meant moving close to the mountains, which really is a perk of living in Switzerland. Whether it's hiking, camping or learning how to snowboard, it is amazing that the mountains are only an hour's drive away and I like to get out there as much as I can.